Understanding MuLUT

Why MuLUT: SR-LUT and Its Limitation

An SR-LUT is obtained and deployed as follows.

1. Training an SR network on a paired LR-HR dataset.

2. Caching the SR network by traversing all possible LR inputs and saving the corresponding HR results, obtaining a list of index-value pairs, i.e., a LUT.

3. Retrieving values from the LUT by querying it with the given LR inputs.

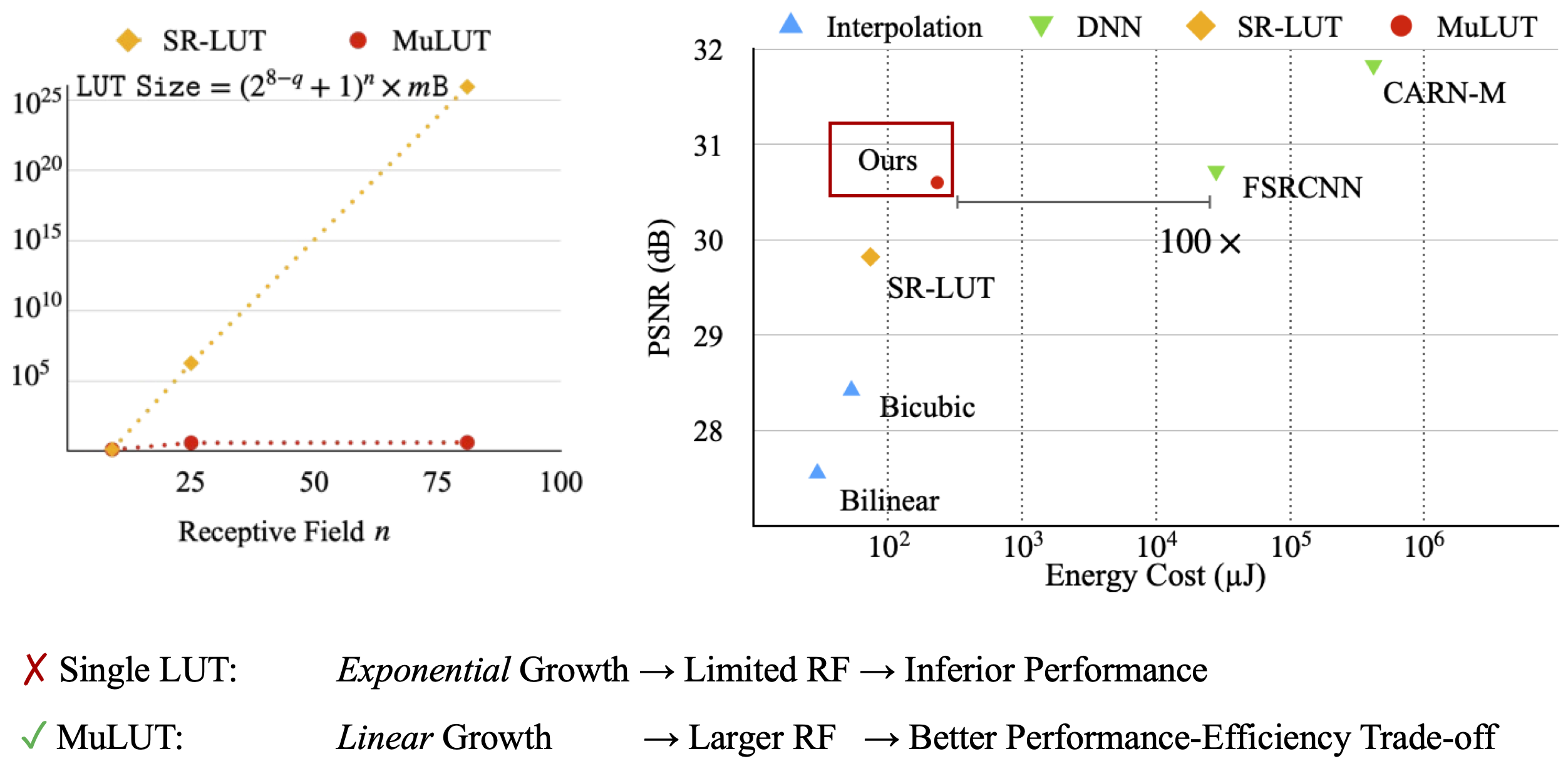
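
The caching and retrieval steps above can be sketched in a few lines of Python. This is only a toy illustration, not the actual SR-LUT implementation: `toy_sr_network` is a hypothetical stand-in for a trained SR network, and the constants `LEVELS`, `N_PIXELS`, and `UPSCALE` are assumed values chosen to keep the table tiny.

```python
import itertools

import numpy as np

LEVELS = 4    # assumed number of quantized intensity levels per pixel (toy value)
N_PIXELS = 4  # assumed receptive field: a 2x2 LR patch
UPSCALE = 2   # assumed upscaling factor


def toy_sr_network(patch):
    """Hypothetical stand-in for a trained SR network.

    Maps N_PIXELS LR values to UPSCALE * UPSCALE HR values.
    """
    patch = np.asarray(patch, dtype=np.float32)
    base = 0.7 * patch[0] + 0.1 * patch[1:].sum()
    return base + np.linspace(-0.1, 0.1, UPSCALE * UPSCALE, dtype=np.float32)


def build_lut(network):
    """Step 2: traverse every possible LR input and cache the network output."""
    shape = (LEVELS,) * N_PIXELS + (UPSCALE * UPSCALE,)
    lut = np.zeros(shape, dtype=np.float32)
    for index in itertools.product(range(LEVELS), repeat=N_PIXELS):
        lut[index] = network(index)
    return lut


def query_lut(lut, patch):
    """Step 3: retrieve the cached HR values for a given LR patch."""
    return lut[tuple(patch)]


lut = build_lut(toy_sr_network)
hr_values = query_lut(lut, (1, 2, 3, 0))
```

After `build_lut` has run, inference no longer calls the network at all: each query is a single array lookup indexed by the LR pixel values.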

However, because every possible input must be enumerated, the size of a LUT grows exponentially with the dimension of its index, i.e., the number of input pixels.

Thus, the receptive field (RF) of an SR-LUT has to be kept small, which results in inferior performance.
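
To make the exponential growth concrete, the following sketch counts the storage a LUT would need. The helper `lut_size_bytes` and all parameter values are illustrative assumptions: each indexing pixel is assumed to take one of `levels` values, each entry is assumed to store `upscale * upscale` output values, and each value is assumed to take one byte.

```python
def lut_size_bytes(levels, n_pixels, upscale, bytes_per_value=1):
    """Storage for a LUT indexed by n_pixels pixels with `levels` values each.

    Number of entries: levels ** n_pixels (one per possible LR input).
    Each entry holds upscale * upscale HR values.
    """
    n_entries = levels ** n_pixels
    return n_entries * upscale * upscale * bytes_per_value


# Assumed settings: 17 sampled levels per pixel, 4x upscaling.
size_rf_2x2 = lut_size_bytes(levels=17, n_pixels=4, upscale=4)
size_rf_3x3 = lut_size_bytes(levels=17, n_pixels=9, upscale=4)

# Without sampling, a full 8-bit index has 256 levels per pixel.
size_full_8bit = lut_size_bytes(levels=256, n_pixels=4, upscale=4)

for name, size in [
    ("2x2 RF, 17 levels", size_rf_2x2),
    ("3x3 RF, 17 levels", size_rf_3x3),
    ("2x2 RF, 256 levels", size_full_8bit),
]:
    print(f"{name}: {size / 2**20:.2f} MiB")
```

Under these assumptions, a 2x2 index with 17 levels needs roughly 1.27 MiB, while the unsampled 8-bit version of the same index needs 64 GiB. Enlarging the index from 4 to 9 pixels multiplies the size by `17 ** 5`, which is why the RF cannot simply be widened.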